
This article is the first of a series of posts in which I will share my experience with Aruba Cloud Pro, the IaaS solution offered by Aruba to build virtual data centers with a pay-per-use pricing model and an interesting set of features.

It's important to clarify that this service can't really compete with the likes of Google Cloud Platform, Amazon AWS or MS Azure, even if its overall logic is not too different: a comprehensive management platform, entirely accessible via the web, that can be used to create, configure and deploy a range of cloud-based services that can be integrated with each other and scaled up or down (vertically) as well as out or in (horizontally) as needed.

More specifically, the product range includes:

- Cloud Servers, i.e. the Virtual Machines created on VMware or Hyper-V hypervisors with redundancy.

- Virtual Switches, switch-like devices that can be used to create private networks between the various servers.

- Public IPs, to grant external (WAN) access.

- Unified Storages, virtual storage units that provide a shared space for the various Cloud Servers.

- Balancers, which can be used to distribute the workload across two or more Cloud Servers (with identical configuration settings and stored data).
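The post doesn't say which algorithm Aruba's Balancers use to spread the load. Purely as an illustration, here is plain round-robin, one common strategy, sketched in Python with hypothetical server names. It also shows why the Cloud Servers behind a Balancer need identical configuration and data: any request can land on any of them.

```python
from itertools import cycle


def round_robin(backends, requests):
    """Pair each request with the next backend in turn (generic round-robin).

    This is a generic illustration, not Aruba's actual balancing algorithm.
    """
    if not backends:
        raise ValueError("at least one backend is required")
    pool = cycle(backends)
    return [(request, next(pool)) for request in requests]


if __name__ == "__main__":
    # Hypothetical Cloud Server names.
    for request, server in round_robin(["web-01", "web-02"], ["req-1", "req-2", "req-3"]):
        print(f"{request} -> {server}")
```

Running it prints `req-1 -> web-01`, `req-2 -> web-02` and `req-3 -> web-01`: with two backends, every other request goes to the same server.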

There is also a set of accessory services that support the products listed above, including bare-metal backup, predefined templates, and FTP access to upload custom virtual disks and/or export those of the created virtual machines.

Infrastructure

These services allowed me to build a data center based on a typical edge-origin architecture, featuring the following elements:

- 1 pfSense Firewall and VPN Server (one of the two standard firewall VM templates offered by Aruba Cloud, the other being Endian)

- 1 NGINX Reverse Proxy on a CentOS 7.x virtual machine

- 1 IIS Web Server on a Windows Server 2016 virtual machine

- 1 SQL Server Database on a second Windows Server 2016 virtual machine

- 1 NGINX Web Server on another CentOS 7.x virtual machine

- 1 MariaDB Database on a third CentOS 7.x virtual machine

As we can see, this is a typical hybrid Windows + Linux environment, which I often like to adopt (and suggest to my customers) because it gives me the best of both worlds: .NET Framework 2.x & 4.x apps and services on a full Windows architecture, LAMP/LEMP/MEAN stacks on Linux, and .NET Core (micro)services on Windows and/or Linux, depending on the availability of the external packages they need.
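To make the edge-origin flow a bit more concrete, here is a minimal Python sketch of the decision the NGINX reverse proxy makes for each request, assuming it fronts both web servers: it reads the Host header and forwards the request to the matching origin server on the LAN. The hostnames and 192.168.1.x addresses are hypothetical; in a real setup this mapping lives in the NGINX configuration (through `server_name` and `proxy_pass` directives), not in Python.

```python
# Hypothetical hostnames and LAN addresses: the real ones are not given in the post.
ORIGINS = {
    "dotnet.example.com": "192.168.1.20",  # IIS web server (Windows Server 2016)
    "php.example.com": "192.168.1.30",     # NGINX web server (CentOS 7.x)
}


def pick_origin(host_header: str) -> str:
    """Return the LAN address of the origin server that should handle a request."""
    # Host headers are case-insensitive and may carry an explicit port ("name:443").
    host = host_header.split(":", 1)[0].strip().lower()
    if host not in ORIGINS:
        raise LookupError(f"no origin configured for {host!r}")
    return ORIGINS[host]


if __name__ == "__main__":
    print(pick_origin("PHP.example.com:443"))  # 192.168.1.30
```

Only the reverse proxy needs to know the origin addresses; the origin servers themselves never have to be reachable from the WAN.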

Networking

When you deploy a virtual server, Aruba Cloud automatically assigns it a public IP address and a network card directly connected to the internet, so that each server can reach the internet directly (and can also be reached from it).

For obvious security reasons I chose to ditch this approach and adopt a WAN + LAN networking configuration orchestrated by the firewall. More specifically, I assigned all the public IPs to the firewall, making it the only VM that directly accepts incoming connections from the WAN; then I put all the other servers on a secured LAN, so that they could communicate with each other without being exposed to the internet.

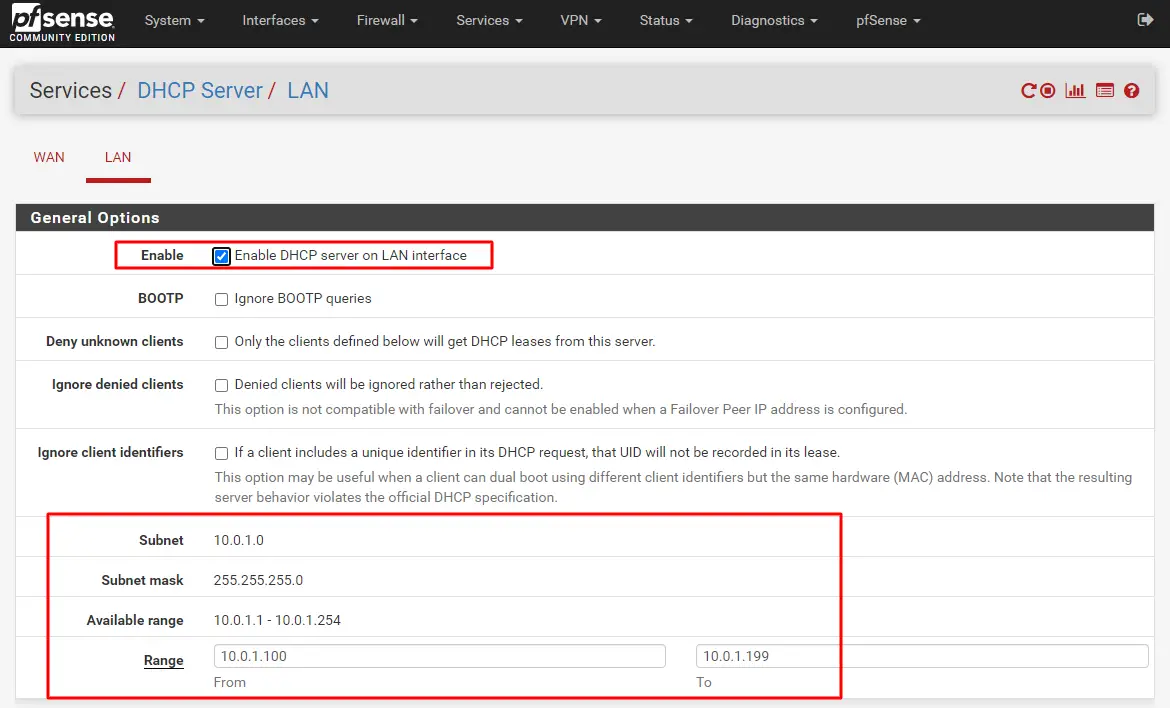

Implementing an internal LAN on Aruba Cloud was actually pretty straightforward thanks to a Virtual Switch, which serves this exact purpose: I just had to configure the LAN network on pfSense and enable its DHCP server, so that the firewall can act as the gateway and handle the whole subnet, as shown in the screenshot below:

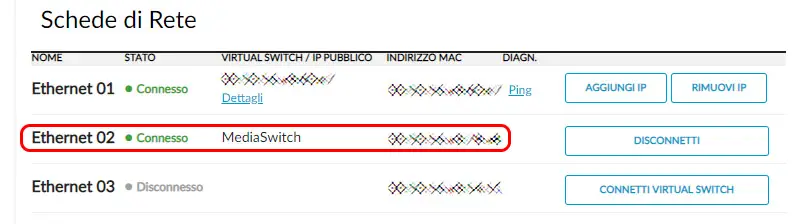
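Before entering the values in pfSense, the subnet plan can be sanity-checked with Python's standard `ipaddress` module. The sketch below assumes a hypothetical 192.168.1.0/24 LAN (the actual subnet only appears in the screenshot), with the firewall on the first usable address acting as gateway and a DHCP pool starting at .100; both are illustrative conventions, not pfSense requirements.

```python
import ipaddress


def plan_lan(cidr, pool_offset=100, pool_size=100):
    """Derive the gateway address and a DHCP range for a private IPv4 LAN.

    Hypothetical conventions: the firewall takes the first usable address
    as gateway, and the DHCP pool starts `pool_offset` addresses into the subnet.
    """
    net = ipaddress.IPv4Network(cidr)  # raises ValueError if host bits are set
    if not net.is_private:
        raise ValueError(f"{cidr} is not a private (non-internet-routable) range")
    if net.prefixlen > 30:
        raise ValueError("subnet too small for a gateway plus DHCP clients")
    gateway = net.network_address + 1
    first = net.network_address + pool_offset
    last = first + (pool_size - 1)
    # Keep the pool clear of the gateway and of the broadcast address.
    if first <= gateway or last >= net.broadcast_address:
        raise ValueError("DHCP pool does not fit between gateway and broadcast")
    return {
        "gateway": gateway,
        "dhcp_range": (first, last),
        "usable_hosts": net.num_addresses - 2,
    }


if __name__ == "__main__":
    plan = plan_lan("192.168.1.0/24")
    print(plan["gateway"], *plan["dhcp_range"], plan["usable_hosts"])
```

For the example subnet this prints `192.168.1.1 192.168.1.100 192.168.1.199 254`, leaving the addresses below .100 free for servers that need a static or reserved IP.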

Once done, I added a secondary Network Interface Card to each of my Cloud Servers to connect them to the LAN Virtual Switch.

I also set up the required inbound and outbound NAT rules, so that each server could still access the internet while being protected from unsolicited incoming connections (see this post for further details). After doing all that, I could finally disable (or remove) the default Network Interface Card connected to the WAN on all the Cloud Servers, to ensure that they could no longer be accessed directly from the public internet.
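To illustrate what the two kinds of NAT rules do, here is a deliberately simplified Python model: an inbound NAT (port forward) rule maps a public IP and port to a LAN server, traffic without a matching rule never reaches the LAN, and outbound NAT rewrites LAN source addresses to the firewall's public IP. All addresses are hypothetical (203.0.113.0/24 is a documentation range), and real pfSense NAT is stateful, tracking connections so that replies can flow back, which this sketch ignores.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class PortForward:
    """Inbound NAT rule: public_ip:public_port -> lan_ip:lan_port."""
    public_ip: str
    public_port: int
    lan_ip: str
    lan_port: int


def translate_inbound(rules: Sequence[PortForward], dst_ip: str,
                      dst_port: int) -> Optional[Tuple[str, int]]:
    """Return the LAN destination for a WAN packet, or None if it would be dropped."""
    for rule in rules:
        if (rule.public_ip, rule.public_port) == (dst_ip, dst_port):
            return rule.lan_ip, rule.lan_port
    return None  # no rule matches: unsolicited traffic never reaches the LAN


def translate_outbound(lan_cidr: str, wan_ip: str, src_ip: str) -> str:
    """Outbound NAT: LAN hosts reach the internet using the firewall's public IP."""
    if ipaddress.ip_address(src_ip) in ipaddress.ip_network(lan_cidr):
        return wan_ip
    return src_ip


if __name__ == "__main__":
    # Hypothetical rule: HTTPS on a public IP goes to the reverse proxy on the LAN.
    rules = [PortForward("203.0.113.10", 443, "192.168.1.10", 443)]
    print(translate_inbound(rules, "203.0.113.10", 443))   # ('192.168.1.10', 443)
    print(translate_inbound(rules, "203.0.113.10", 3389))  # None
    print(translate_outbound("192.168.1.0/24", "203.0.113.10", "192.168.1.20"))
```

Note how Remote Desktop (port 3389) has no port forward in this example: in the setup described here, administrators reach RDP through the VPN instead.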

External connections via VPN

Since pfSense natively integrates a VPN server, I took the chance to configure it so that system administrators (including myself) could securely connect to the LAN via a VPN client and access the servers using Remote Desktop.

To implement the VPN I chose the OpenVPN protocol, probably the most secure among those made available by pfSense, configured in client-server mode. Configuring the clients is extremely simple, since pfSense lets you download a configuration file for each user, which sets up the client automatically, as well as (for the less experienced) a customized installer that bundles the OpenVPN client with all the settings needed to connect. Obviously, neither the configuration file nor the installer autogenerated by pfSense contains the actual user credentials, which are required to establish the connection.

- pfSense - Setup and Configure a OpenVPN Server
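One detail worth double-checking in the OpenVPN server settings is that the IPv4 Tunnel Network (the subnet VPN clients get their addresses from) does not overlap with the LAN listed under IPv4 Local network(s); otherwise routing between VPN clients and the servers becomes ambiguous. A minimal Python check with the standard `ipaddress` module, using hypothetical subnets:

```python
import ipaddress
from typing import Iterable, List


def tunnel_overlaps(tunnel_cidr: str, local_networks: Iterable[str]) -> List[str]:
    """Return the local networks that overlap the OpenVPN tunnel network."""
    tunnel = ipaddress.ip_network(tunnel_cidr)
    return [cidr for cidr in local_networks
            if tunnel.overlaps(ipaddress.ip_network(cidr))]


if __name__ == "__main__":
    # Hypothetical values: 10.0.8.0/24 for the tunnel, 192.168.1.0/24 for the LAN.
    print(tunnel_overlaps("10.0.8.0/24", ["192.168.1.0/24"]))       # []
    print(tunnel_overlaps("192.168.1.128/25", ["192.168.1.0/24"]))  # ['192.168.1.0/24']
```

An empty list means the tunnel subnet is safe to use.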

Common issues and fixes

Although the configuration was rather easy, I ran into some issues, mostly related to specific quirks of the systems involved; in this paragraph I've tried to summarize the most troublesome of them, hoping that the solutions I found can help other system administrators as well.

Windows File & Folder Sharing

Among the various things I wanted to configure on my Windows-based virtual servers was file sharing through the internal LAN, so that the servers and the VPN users (the system administrators) could access the shared folders using Windows File Explorer. To do that, I had to perform a number of tasks:

- Share some folders on the various Windows servers, enabling File and Printer Sharing on their LAN network interface(s).

- Add a couple of firewall rules on pfSense to allow traffic from both the LAN and OpenVPN interfaces to any LAN destination.

- Open the Windows Firewall ports for file sharing (135-139 and 445 TCP/UDP), which can be easily done by enabling the File and Printer Sharing (SMB-In) and File and Printer Sharing over SMBDirect (iWARP-In) rules, already present in the Windows Defender Firewall but disabled by default.
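Before digging into policies, it can help to confirm that the file-sharing ports are actually reachable across the LAN or the VPN. The Python sketch below only probes the TCP ports (135, 139 and 445) with `socket.create_connection`; the UDP NetBIOS ports (137-138) can't be verified with a simple connect, since UDP is connectionless. The idea is to run it from one server (or a VPN client) against another server's LAN IP.

```python
import socket
from typing import Dict, Iterable


def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timed out, host unreachable, ...
        return False


def check_file_sharing(host: str,
                       ports: Iterable[int] = (135, 139, 445)) -> Dict[int, bool]:
    """Map each TCP file-sharing port on `host` to whether it accepts connections."""
    return {port: tcp_port_open(host, port) for port in ports}
```

For instance, `check_file_sharing("192.168.1.20")` (a hypothetical server address) returns a dict such as `{135: ..., 139: ..., 445: ...}`; a `False` on 445 points to a firewall problem (pfSense or Windows Defender Firewall) rather than an authentication one.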

That was all it took... or so I thought before being greeted by the following errors when I tried to access a shared folder:

An error occurred while connecting to address \\<LAN-IP>\<SHARED-FOLDER>\.

The operation being requested was not performed because the user has not been authenticated.

You can't access this shared folder because your organization's security policies block unauthenticated guest access. These policies help protect your PC from unsafe or malicious devices on the network.

I got the above messages alternately on the two Windows machines, and I was unable to connect to the shared folders.

These errors were caused by a non-trivial configuration issue that took some valuable time to fix; the second message greatly helped me understand where the problem actually was, since it led me to check the local group policies. In short, the culprit was the default Windows Guest account, which was enabled and, at the same time, blocked from accessing the network.
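Since the culprit was the built-in Guest account being enabled, a quick way to check its status on each Windows server is `net user Guest`, whose output includes an `Account active` line. The Python helper below parses that line; it assumes an English-language Windows installation, since both the account name and the output labels are localized in other languages.

```python
import re
import subprocess


def guest_account_active(net_user_output: str) -> bool:
    """Parse the 'Account active' line printed by `net user Guest`.

    Assumes English-locale output; other Windows languages use different labels.
    """
    match = re.search(r"^Account active\s+(Yes|No)\s*$", net_user_output,
                      re.MULTILINE | re.IGNORECASE)
    if match is None:
        raise ValueError("'Account active' line not found (non-English locale?)")
    return match.group(1).lower() == "yes"


def local_guest_account_active() -> bool:
    """Run `net user Guest` on the local Windows machine (Windows only)."""
    result = subprocess.run(["net", "user", "Guest"], capture_output=True,
                            text=True, check=True)
    return guest_account_active(result.stdout)
```

The parsing is kept separate from the command so it can be tested on any OS; only `local_guest_account_active()` needs to run on the Windows servers themselves.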

Conclusion

That's it, at least for now: I hope this post will help other system administrators who are looking for a way to securely host their virtual infrastructure on Aruba Cloud Pro.