Over the last few years the world wide web has seen an exponential growth of hackers, malware, ransomware and other malicious software or parties constantly trying to find a way to steal our personal data. Given this scenario, it goes without saying that securing our data has become one of the most important tasks that we should prioritize, regardless of the role we usually play. The general (and urgent) need to prevent unauthorized access to personal, sensitive and/or otherwise critical information is something that should be acknowledged by everyone - end-users, service owners, server administrators and so on: the differences are mostly related to what we need to protect and how we should do that.

Needless to say, choosing the proper way to protect our data usually follows a well-executed risk assessment and a cost-benefit analysis, which is a great approach to help us find the appropriate technical and organisational measures to implement in our specific scenario. This is also the proper way to act according to the General Data Protection Regulation (GDPR), as stated in Art. 32 - Security of Processing:

Taking into account the state of the art, the costs of implementation and the nature, scope, context and purposes of processing as well as the risk of varying likelihood and severity for the rights and freedoms of natural persons, the controller and the processor shall implement appropriate technical and organisational measures to ensure a level of security appropriate to the risk [...]

Here's a list of the most common technical and organisational measures to ensure the protection and security of the data nowadays:

- Access control: Protect all physical access to your server, client and/or data rooms with keys, chip cards, walls, lockers, alarms and the like.

- Minimization: Ensure that all the authorized parties can access only the data related to their specific tasks and/or authorization, without being allowed to see anything else.

- Integrity: Protect your data from accidental loss, destruction or damage using appropriate countermeasures (fire/flood sensors, Disaster Recovery and the like).

- Pseudonymisation: Replace user-related data with random, anonymous blocks of text, so that the owner can still retain the entries (for statistical purposes) while stripping them of any personal info.

- Encryption in-transit: Ensure that the data is always transmitted using strong in-transit encryption standards (SSL/TLS certificates) and through secure connections: this also applies to any kind of website and web-based service containing forms, login screens, upload/download capabilities and so on.

- Encryption at-rest: Protect your local data storage units (including those used by servers and desktop & mobile clients) with a strong at-rest encryption standard; ensure that the data stored in SaaS and cloud-based services are also encrypted at-rest.

- Confidentiality: Prevent unauthorized or unlawful processing by implementing concepts such as separation of concerns & separation of duties, enforcing password policies, and so on.

- Recoverability: Ensure that all the relevant data is subject to regular backups, and be sure to check them regularly to ensure that the data can be successfully retrieved.

- Evaluation: Submit the whole system to regular technical reviews and third-party audits, adopt an effective set of security indicators, and so on.
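
As an illustration of the pseudonymisation measure listed above, here is a minimal Python sketch. The `Pseudonymizer` class, the sample e-mail addresses and the order amounts are all hypothetical; the only idea taken from the list is replacing user-related data with random tokens while keeping the entries usable for statistics.

```python
import secrets


class Pseudonymizer:
    """Maps real identifiers to random tokens (hypothetical helper)."""

    def __init__(self):
        # real identifier -> random token; this table must be kept secret
        self._tokens = {}

    def pseudonym(self, identifier):
        # Reuse the same token for a known identifier, so that entries
        # belonging to the same person can still be grouped together.
        if identifier not in self._tokens:
            self._tokens[identifier] = secrets.token_hex(8)
        return self._tokens[identifier]


pseudonymizer = Pseudonymizer()

# Made-up sample data: (e-mail address, order amount)
orders = [
    ("alice@example.com", 30),
    ("bob@example.com", 12),
    ("alice@example.com", 5),
]

# The e-mail address is replaced by a random token, the amount is kept.
safe_orders = [(pseudonymizer.pseudonym(email), amount) for email, amount in orders]
print(safe_orders)
```

Whoever holds the mapping table can still link a token back to a person, which is why the table itself should be stored separately and protected.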

In this post we're going to talk about two of these technical measures: Encryption in-transit and Encryption at-rest, leaving the other topics for further articles.

Introduction: the Three Stages of Digital Data

The first thing we should do is to enumerate how many "states" digital data can actually have, and be sure to understand each one of them:

- At rest: this is the initial state of any digital data: in short, it indicates data that is stored somewhere without being used by and/or transmitted to anyone (including software, third-parties, human beings, and so on). From local Hard Drives to Network Attached Storage, from USB pendrives to mobile devices, from system folders to database servers, any physical and logical storage system, unit or device is meant to contain data at rest... at least for a while.

- In transit: also known as "in motion". This refers to data which is being transmitted from somewhere to somewhere else. It's worth noting that a "data transfer" can take place between any number of parties, not just two (a sender and a receiver): for example, when we transfer a file from our desktop PC to our laptop using our LAN, we're basically performing a data transfer involving a single party (us); conversely, when submitting a transaction to a distributed database, such as a blockchain, we're performing a data transfer between an indefinite number of parties (all the blockchain nodes).

- In use: whenever the data is not just being stored passively on a hard drive or external storage media, but is being processed by one or more applications - and is therefore in the process of being generated, viewed, updated, appended, erased, and so on - it's considered to be "in use". It goes without saying that data in use is susceptible to different kinds of threats, depending on where it is in the system and who is able to access and/or use it. However, the encryption of data in-use is rather difficult to pull off, since it would most likely cripple, hinder or crash the application which is actually accessing it: for this very reason, the best way to protect data in use is to ensure that the application takes care of that job by adopting the most secure development and implementation patterns within its source code.

The sum of the three states explained above is called "the Three Stages of Digital Data": now that we've got the gist of them, we're ready to dive deep into the encryption topics.

Data Encryption at-rest

From the definition of "at rest" given above we can easily understand how this kind of data is typically in a stable state: it is not traveling within the system or network, and it is not being acted upon by any application or third-party. It's something that has reached a destination, at least temporarily.

Reasons to use it

Why should we even encrypt that data, then? Well, there are a number of good reasons for doing so: let's take a look at the most significant ones.

Physical theft

If our device is stolen, encryption at-rest will prevent the thief from being immediately able to access our data. Sure, they can still try to decrypt it using brute-force or other encryption-cracking methods, but this will take a considerable amount of time: we should definitely be able to pull off adequate countermeasures before that happens, such as changing the account info they might be able to see or otherwise use via existing browser password managers, login cookies, e-mail client accounts and so on; tracking our device and/or issuing an "erase all data" command using our Google or Apple remote device management services; and so on.
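
To get a feeling for how much time a brute-force attempt might take, here is a rough back-of-the-envelope calculation in Python. The guessing speed of one trillion attempts per second is purely an assumption made for illustration, and so are the two key spaces being compared (an 8-letter lowercase password and a 128-bit random key).

```python
SECONDS_PER_YEAR = 365 * 24 * 3600
GUESSES_PER_SECOND = 10**12  # assumed attacker speed, illustrative only


def worst_case_seconds(keyspace):
    """Time needed to try every possible key at the assumed speed."""
    return keyspace / GUESSES_PER_SECOND


weak_keyspace = 26**8     # password made of 8 lowercase letters
strong_keyspace = 2**128  # random 128-bit key

weak_seconds = worst_case_seconds(weak_keyspace)
strong_years = worst_case_seconds(strong_keyspace) / SECONDS_PER_YEAR

print(f"8 lowercase letters: {weak_seconds:.2f} seconds")
print(f"128-bit random key:  {strong_years:.2e} years")
```

The comparison suggests that the encryption itself is rarely the weak spot: the time we actually gain depends on the strength of the password or key protecting the device.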

Logical theft

If our PC, website or e-mail account gets hacked by a malicious user or software, encryption at-rest will make the offender unable to read our data - even when it's stolen or downloaded: it's basically the same scenario as physical theft, except it's way more subtle because most users (or administrators) won't even be aware of it.

Here's another good chance to remember the terrific words uttered by John T. Chambers, former CEO of Cisco, Inc.:

There are two types of companies: those that have been hacked, and those who don't know they have been hacked.

Considering the current state of the internet and the over-abundance of malware and measurable hacking attempts, the same statement can be made for any end-user possessing a web-enabled device: 100% guaranteed.

Human errors

Leaving physical and/or logical theft aside, there are a lot of other scenarios where data encryption at-rest could be a lifesaver: for example, if we lose our smartphone (and someone finds it); or if we make a mistake while assigning permissions, granting unauthorized users (or customers) access to files/folders/data they shouldn't be able to see; or if we leave our local PC or e-mail password in plain sight, thus allowing anyone who doesn't feel like respecting our privacy to take a look at our stuff; and the list could go on for a while.

How can it help us

To summarize all that, we could answer our previous questions with a single line by saying that encrypting our at-rest data could help us to better deal with a possible Data Breach.

It won't help us prevent that from happening - which is mostly a task for firewalls, antiviruses, good practices and security protocols - but it will definitely give us the chance (and the time) to set up the appropriate countermeasures, hopefully minimizing the overall damage done by any possible leak.

How to implement it

Implementing a Data Encryption at-rest security protocol might be either easy or hard, depending on the following factors:

- which physical and logical data sources/storages we want (or have) to protect: physical sources include Hard Disks, NAS elements, smartphones, USB pendrives, and so on, while logical sources include local or remote databases, cloud-based assets, virtualized devices, and so on;

- who needs to have access to these data: human beings (local or remote users or other third-parties connecting to us), human-driven software (such as MS Word) or automatic processes or services (such as a nightly backup task);

- how much we're willing to sacrifice in terms of overall performance and/or ease of access to increase security: can we ask all our local (and remote) users to decrypt the data before being able to access it? Should we use a password, a physical token or an OTP code? Can we make the encryption transparent enough to not hinder our external users, and also to allow our software apps and tools to work with the encrypted data whenever they need to?

Luckily enough, these factors are well-known by most at-rest encryption tools, which have been designed to protect our data without compromising the overall functionality of our environment:

- if we want to encrypt physical (or logical) Hard-Disk drives, we can use great software tools such as VeraCrypt (100% free) or AxCrypt (free version available); those who are using a Windows operating system, such as Windows 10 or Windows Server, can also use the built-in BitLocker Drive Encryption feature, without having to install any third-party software.

- if we need to protect our USB pendrives, we can either use the aforementioned tools or purchase a hardware-encrypted Flash Drive implementing fingerprint-based or password-based unlock mechanisms (starting from 20~30 bucks);

- if we would like to encrypt the data stored within a Database Management System, most of the DBMS available today provide native encryption techniques (InnoDB tablespace encryption for MySQL and MariaDB, Transparent Data Encryption for MSSQL, and so on);

- if we're looking for a way to securely store our E-Mail messages, we can easily adopt a secure e-mail encryption standard such as S/MIME or PGP (both of them are free): although these protocols are mostly related to in-transit encryption, since they protect data mostly meant to be transferred to remote parties, as a matter of fact they are commonly used to perform client-side encryption, which means that they protect the e-mail messages while they're still at-rest. Needless to say, since those messages will most likely be sent, our recipient(s) will also have to adopt the same standard to be able to read them.
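
Many password-based at-rest encryption setups do not use the password directly as the encryption key: they derive a key from it first. The sketch below shows that step using PBKDF2 from Python's standard library. The sample passwords and the iteration count are assumptions chosen for illustration; the tools mentioned above each have their own key derivation settings, which are not reproduced here.

```python
import hashlib
import hmac
import os


def derive_key(password, salt, iterations=200_000):
    """Derive a 256-bit key from a password (iteration count is an assumption)."""
    return hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations, dklen=32
    )


# The salt is random but not secret: it is stored next to the encrypted data.
salt = os.urandom(16)

key = derive_key("correct horse battery staple", salt)
same_key = derive_key("correct horse battery staple", salt)
wrong_key = derive_key("a wrong guess", salt)

# Same password and salt give the same key; a wrong password does not.
print(hmac.compare_digest(key, same_key))
print(hmac.compare_digest(key, wrong_key))
```

The derived key would then be handed to a vetted cipher implementation; that part is intentionally left out, since the standard library does not ship one.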

Data Encryption in-transit

As the name implies, data in-transit should be seen much like a transmission stream: a great example of data in-transit is a typical web page we receive from the internet whenever we surf the web. Here's what happens under the hood in a nutshell:

- We send an HTTP (or HTTPS) request to the server hosting the website we're visiting.

- The web server accepts our request, processes it by finding the (static or dynamic) content we've asked for, then sends it to us as an HTTP (or HTTPS) response over a given TCP port (usually 80 for HTTP and 443 for HTTPS).

- Our client, usually a web browser such as Google Chrome, Firefox or Edge, receives the HTTP(S) response, stores it in its internal cache and shows it to us.

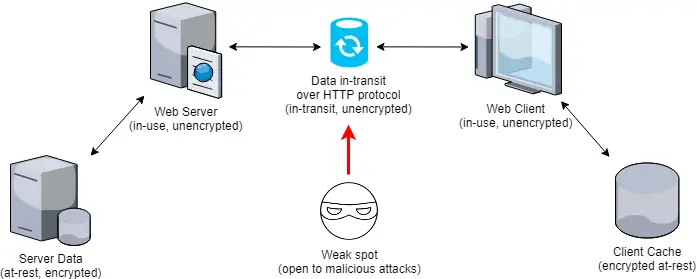

As we can see, there clearly is a data transmission going on between the server and the client: during that transmission, the requested data (the web page HTML code) becomes a flow that goes through at least five different states:

- it starts at-rest (server storage),

- then changes to in-use (web server memory),

- then to in-transit (using the HyperText Transfer Protocol on a given TCP port),

- then again to in-use (web browser),

- and finally to at-rest (client cache).

Reasons to use it

Now, let's take for granted that both the server and client have implemented a strong level of data encryption at-rest: this means that the first and the fifth state are internally safe, because any intrusion attempt would be made against encrypted data. However, the third state - where the data is in-transit - might be encrypted or not, depending on the protocol the server and the client are actually using to transmit the data.

Here's what usually happens under the hood when the HTTP protocol is being used:

As we can see, the security issue is quite evident: when the web server processes the incoming request and transparently decrypts the requested data, the channel used to transfer it to the web client (HTTP) is not encrypted: therefore, any offending party that manages to successfully pull off a suitable attack (see below) could have immediate access to our unencrypted data.

How can it help us

If you're curious about which kinds of attacks can be used against an unencrypted TCP-based transmission protocol such as HTTP, here are a couple of threats you should be aware of:

- Eavesdropping: a network layer attack that focuses on capturing small packets transmitted over the network by other computers and reading their content in search of any type of information.

- Man-in-the-Middle: a tampering-based attack where the attacker secretly relays and/or alters the communication between two parties, making them believe they are directly communicating with each other.

Implementing proper encryption in-transit protocols to secure our critical data transfer endpoints will definitely help us prevent these kinds of threats.
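
Besides hiding the content from eavesdroppers, secure transfer protocols also let the receiver detect tampering. The Python sketch below illustrates only that integrity-check idea, using an HMAC from the standard library: it is not how TLS is implemented, and the shared key and the two messages are made up for the example.

```python
import hashlib
import hmac


def sign(key, message):
    """Compute an authentication tag for the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key, message, tag):
    """Check the tag in constant time."""
    return hmac.compare_digest(sign(key, message), tag)


shared_key = b"hypothetical-shared-secret"  # known to both endpoints only
message = b"transfer 10 EUR to Bob"
tag = sign(shared_key, message)

# A man-in-the-middle alters the message but cannot forge a matching tag.
tampered = b"transfer 9000 EUR to Mallory"

print(verify(shared_key, message, tag))
print(verify(shared_key, tampered, tag))
```

Without the shared key, the attacker cannot produce a valid tag for the altered message, so the receiver can reject it.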

How to implement it

Implementing an effective encryption in-transit pattern is mostly a matter of sticking to a widely known series of recommendations and best practices while designing the actual data transfer: which protocols to (not) use, which software to (not) adopt, and so on. For example:

- Whenever the transmitting device is reachable via web interface, web traffic should only be transmitted over HTTPS, secured by a strong protocol such as Transport Layer Security (TLS), the successor to the now-deprecated Secure Sockets Layer (SSL): this applies to any web site and/or WAN-reachable service, including e-mail servers and the like. As of today, the best (and easiest) way to enable TLS and implement encryption in-transit for any website is by obtaining an SSL/TLS certificate: those can either be purchased from registered Certificate Authorities (Comodo, GlobalSign, GoDaddy, DigiCert and their huge list of resellers) or auto-generated through a self-signing process, as we briefly explained in this post. Although self-signed certificates grant the same encryption level as their CA-signed counterparts, they won't generally be trusted by users, as their browsers won't be able to verify the identity of the issuer (you) and will flag your website as "untrusted": for this very reason, they should only be used on non-production (or non-publicly accessible) servers and services.

- Any data transmitted over e-mail should be secured using cryptographically strong email encryption tools such as S/MIME or PGP, which we already covered when we talked about data encryption at-rest: although these protocols perform their encryption at client level (and therefore at-rest), they're also great to protect the asynchronous in-transit flow of an e-mail message.

- Any binary data should be encrypted using proper file encryption tools before being attached to e-mail and/or transmitted in any other way. Most archive formats, including ZIP, RAR and 7Z, support a decent level of password-protected encryption nowadays: using them is often a great way to add an additional level of security and reduce the attachment size at the same time.

- Non-web transmission of text and/or binary data should also be encrypted via application level encryption, taking the following scenarios into account:

  - If the application database resides outside of the application server, the connection between the database and application should be encrypted using FIPS compliant cryptographic algorithms.

  - Whenever application level encryption is not available, implement network level encryption such as IPSec or SSH tunneling, and/or ensure that the transmission itself is performed using authorized devices operating within protected subnets with strong firewall controls (VPN and the like).
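
On the client side, the recommendations above mostly boil down to using a TLS configuration that verifies the server certificate and refuses obsolete protocol versions. Here's a minimal sketch using Python's standard `ssl` module; requiring TLS 1.2 or newer is an assumption made for this example, not a requirement stated in this post.

```python
import ssl

# Default client-side context: loads the system's trusted CA certificates
# and enables certificate and hostname verification.
context = ssl.create_default_context()

# Assumption for this sketch: refuse anything older than TLS 1.2.
context.minimum_version = ssl.TLSVersion.TLSv1_2

print(context.verify_mode == ssl.CERT_REQUIRED)  # certificate must be valid
print(context.check_hostname)                    # certificate must match the host
print(context.minimum_version.name)
```

Such a context can then be passed to the standard library's network clients, or used to wrap a socket with `context.wrap_socket(sock, server_hostname=...)`. A self-signed certificate would be rejected by this configuration, which matches the "untrusted" behaviour described above.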

The following table shows some examples of the insecure network protocols you should avoid and their secure counterparts you should use instead:

| Transfer Type | What to avoid (insecure) | What to use (secure) |
|---|---|---|
| Web Access | HTTP | HTTPS |
| E-Mail Servers | POP3, SMTP, IMAP | POP3S, IMAPS, SMTPS |
| File Transfer | FTP, RCP | FTPS, SFTP, SCP, WebDAV over HTTPS |
| Remote Shell | telnet | SSH2 |
| Remote Desktop | VNC | radmin, RDP |
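
A table like this can also be turned into a simple guard inside our own tools. The following Python sketch rejects URLs that use one of the insecure schemes; the `is_secure_url` helper, the scheme sets and the sample URLs are hypothetical and only cover a few rows of the table.

```python
from urllib.parse import urlsplit

# Illustrative subset of the table above, expressed as URL schemes.
SECURE_SCHEMES = {"https", "ftps", "sftp", "ssh"}
INSECURE_SCHEMES = {"http", "ftp", "telnet"}


def is_secure_url(url):
    """Return True only if the URL uses one of the secure schemes."""
    return urlsplit(url).scheme.lower() in SECURE_SCHEMES


for url in (
    "https://example.com/login",
    "http://example.com/login",
    "sftp://files.example.com/backup.tar",
    "ftp://files.example.com/backup.tar",
):
    print(url, "->", "ok" if is_secure_url(url) else "refused")
```

Anything that is not explicitly listed as secure is refused, which is usually the safer default for this kind of check.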

End-to-End Encryption

Encryption in-transit is really helpful, but it has a major limitation: it does not guarantee that the data will be encrypted at its starting point and won't be decrypted until it's in use. In other words, our data might still be intercepted by occasional and/or malicious eavesdroppers, including internet providers, communication service providers and whoever could access the cryptographic keys needed to decrypt the data while in-transit.

Overcoming such limitation is possible thanks to End-to-End Encryption (E2EE), a communication paradigm where only the communicating end parties - for example, the users - can decrypt and therefore read the messages. End-to-end encrypted data is encrypted before it’s transmitted and will remain encrypted until it’s received by the end-party.

Reasons to use it

To better understand how end-to-end encryption outperforms in-transit encryption in terms of resilience to eavesdroppers, let's imagine the following scenarios.

- Suppose that a third party manages to plant their own root certificate among the trusted certificate authorities: such an action could theoretically be performed by a state actor, a police service or even a malicious/corrupted operator of a Certificate Authority. Anyone who is able to do this could successfully operate a man-in-the-middle attack on the TLS connection itself, eavesdropping on the conversation and possibly even tampering with it. End-to-end encrypted data is natively resilient to this kind of attack, because the encryption is not performed at the server level.

- End-to-end encryption can also increase the protection level among the user processes spawned by an operating system. Do you remember the CPU flaws called Spectre and Meltdown? Both of them allowed a malicious third-party (such as a rogue process) to read memory data without being authorized to do so. End-to-end encryption could mitigate such a scenario as long as the encryption is performed between user processes (as opposed to the kernel), thus reducing the amount of unencrypted data that ends up in memory.

How it can help us

End-to-end encryption is the most secure form of communication that can be used nowadays, as it ensures that only you and the person you're communicating with can read what is sent, and nobody in between, not even the service that actually performs the transmission between peers. Various end-to-end encryption implementations are already available on most messaging apps and services (including WhatsApp, LINE, Telegram for its secret chats, and the like). In a typical "communication app" scenario, the messages are secured with a lock, and only the sender and the recipient have the special key needed to unlock and read them: for added protection, every message is automatically sent with its own unique lock and key.

How to implement it

End-to-end encryption can be used to protect anything: chat messages, files, photos, sensor data on IoT devices, permanent or temporary data. We can choose what data we want to end-to-end encrypt. For example, we might want to keep benign information related to a chat app (like timestamps) in plaintext but end-to-end encrypt the message content.

- Every user has a private & public key pair, which the software has to generate on the user's device at signup or the next time they log in.

- The user’s public key is published to a public place (such as a REST-based key management service): this is required for users to find each other’s public keys and be able to encrypt data to each other.

- The user's private key remains on the user's device, protected by the operating system's native key store (or other secure stores).

- Before sending a chat message or sharing a document, the app encrypts the contents using the recipient’s public key (client-side).
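
The four steps above can be sketched in a few lines of Python. To keep the example self-contained it uses a toy, textbook RSA with tiny primes, which is an assumption made purely for illustration: it is completely insecure and must never be used for real data. Real apps rely on vetted cryptographic libraries and more elaborate schemes. The `key_directory` dictionary is a hypothetical stand-in for the public key service.

```python
# Toy textbook RSA with tiny primes: for illustration only, NOT secure.

def generate_keypair(p, q, e=17):
    """Build a (public, private) key pair from two small primes."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)  # modular inverse, requires Python 3.8+
    return (n, e), (n, d)


def encrypt(public_key, plaintext):
    """Encrypt byte by byte with the recipient's public key."""
    n, e = public_key
    return [pow(byte, e, n) for byte in plaintext]


def decrypt(private_key, ciphertext):
    """Decrypt with the private key that never left the device."""
    n, d = private_key
    return bytes(pow(value, d, n) for value in ciphertext)


# 1. Bob's device generates a key pair at signup.
bob_public, bob_private = generate_keypair(61, 53)

# 2. Only the public key is published (hypothetical directory service).
key_directory = {"bob": bob_public}

# 3. bob_private stays on Bob's device and is never uploaded.

# 4. Alice encrypts client-side with Bob's public key before sending.
ciphertext = encrypt(key_directory["bob"], b"hello Bob")

# The server only relays `ciphertext`; Bob decrypts it on his own device.
received = decrypt(bob_private, ciphertext)
print(received)
```

The relaying service only ever sees `ciphertext` and the public key, which is the property that makes the communication end-to-end encrypted.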

Conclusion

Our journey through the various encryption paradigms is complete: we sincerely hope that this overview will help users and system administrators to increase their awareness of the various types of encryption available today.