DevOps is a software development methodology that combines software development (Dev) with information technology operations (Ops), blending these two worlds across the entire service lifecycle: from initial product design, through the development process, to production support.

In this post, after a brief introduction explaining what DevOps is about, we'll try to shed some light on the overall methodology, the consecutive stages that define its lifecycle, and the best practices that this approach can bring to existing companies and software development teams. If you want to further increase your knowledge, you can also try one of the many DevOps Online Training courses available on the web.

What DevOps actually is

Although there is no universally accepted definition of the term "DevOps", there is general consensus in describing it as "a set of practices intended to reduce the time between committing a change to a system and the change being placed into normal production, while ensuring high quality". This definition, first given by Len Bass, Ingo Weber, and Liming Zhu, correctly frames the theoretical and academic aspects. In a broader context, however, the term is frequently used to describe a new paradigm that spans the whole development cycle of an IT product, from the initial idea to the post-release phases.

Main goal(s)

The whole purpose of adopting a DevOps approach revolves around the following objectives:

- Faster and Continuous Development

- Faster and Reliable Quality Assurance

- Faster and Secure Deployment

- Faster and Predictable Time to Market

- Faster and Frequent Releases

Most of these goals are achieved through the use of iterative patterns and continuous feedback between end-users, customers, product owners, development, quality assurance, and production engineers.

As we can see, "faster" is clearly the primary driver here. However, the speed increase is mostly a by-product of the best practices adopted to reach the second goal of each pair (continuous development, reliable quality assurance, secure deployment, and so on). In other words, speed is often the most measurable benefit of switching to a DevOps way of (re)thinking the development process, following the golden principle that working better will also allow us to work faster in the long term.

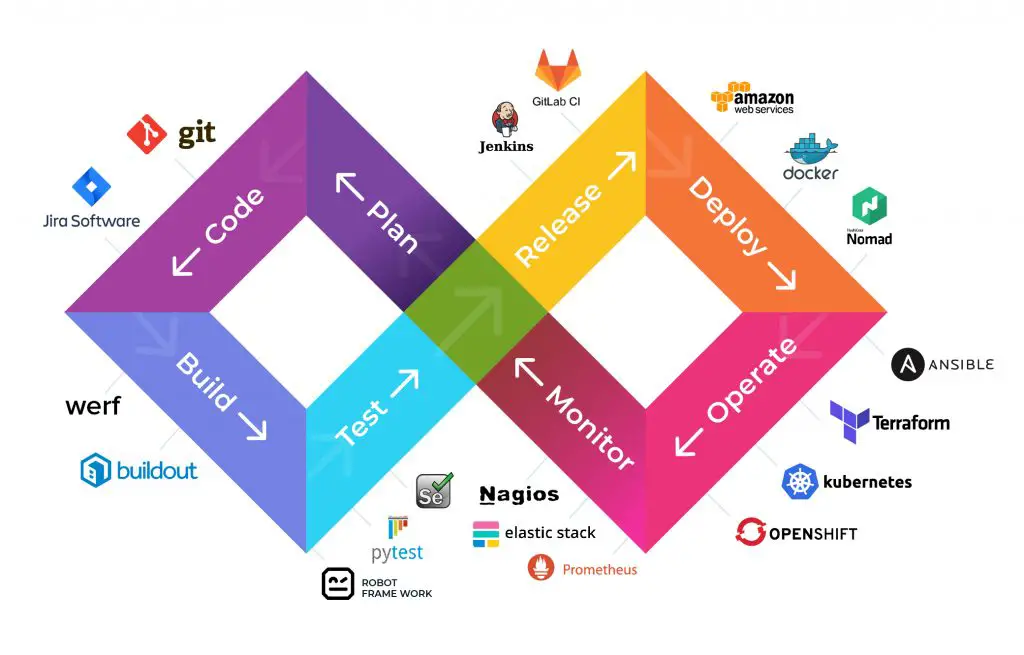

DevOps Toolchains

Since DevOps is intended to be a cross-functional way of working, it relies on multiple sets of tools rather than a single one. Each of these sets, often called a toolchain, is expected to fit into one or more categories, and each category reflects one of the key aspects (or "phases") of the design, development, and/or delivery processes.

Here's a list of the most important toolchains, grouped by category:

- Planning: task management, schedules.

- Coding: code development and review, source code management tools, code merging.

- Building: continuous integration tools, version control tools, build status.

- Testing: continuous testing tools that provide quick and timely feedback on business risks, performance measurement.

- Packaging: artifact repository, application pre-deployment staging.

- Releasing: change management, release approvals, release automation.

- Operating: infrastructure installation, configuration and management, infrastructure changes (scalability), infrastructure as code tools, capacity planning, capacity & resource management, security check, service deployment, high availability (HA), data recovery, log/backup management, database management.

- Monitoring: service performance monitoring, log monitoring, applications performance monitoring, end-user experience, incident management.

Each toolchain, of course, consists of a set of tools: for example, continuous integration can be handled with tools such as Jenkins, GitLab CI/CD, or Bitbucket Pipelines; infrastructure as code can be managed with Terraform, Ansible, or Puppet; and so on.

The DevOps Lifecycle

If we take the categories we split the toolchains into and line them up, we get the DevOps product lifecycle:

Planning > Coding > Building > Testing > Packaging > Releasing > Operating > Monitoring

This can be further "simplified" in the following ways:

Planning > Continuous Integration > Continuous Deployment > Monitoring

Planning > CI / CD > Monitoring
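
To make the ordering concrete, here is a minimal Python sketch that models one pass through the eight-stage lifecycle above, where a change only advances if the current stage succeeds. The stage names come from the list; `run_pipeline` and the stage handlers are hypothetical placeholders, not part of any real tool, and a real lifecycle loops back from Monitoring to Planning rather than ending.

```python
from typing import Callable, Dict, List, Tuple

# The eight stages of the DevOps lifecycle, in order.
STAGES: List[str] = [
    "Planning", "Coding", "Building", "Testing",
    "Packaging", "Releasing", "Operating", "Monitoring",
]

# Hypothetical stage handler: takes a change id, returns True on success.
StageHandler = Callable[[str], bool]


def run_pipeline(change: str, handlers: Dict[str, StageHandler]) -> Tuple[List[str], str]:
    """Push one change through every stage in lifecycle order.

    Returns the stages the change completed and the stage that failed
    ("" if the change made it all the way through).
    """
    completed: List[str] = []
    for stage in STAGES:
        # A stage with no registered handler is treated as a successful no-op.
        handler = handlers.get(stage, lambda _change: True)
        if not handler(change):
            # Stop here: a failing change never reaches the later stages.
            return completed, stage
        completed.append(stage)
    return completed, ""


if __name__ == "__main__":
    # Simulate a change whose tests fail: it is never packaged or released.
    done, failed = run_pipeline("change-42", {"Testing": lambda _change: False})
    print(done)    # ['Planning', 'Coding', 'Building']
    print(failed)  # Testing
```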

Some diagrams use Configuring instead of Operating, others talk about Release and Deploy instead of Packaging and Releasing, and others fold the Testing and Packaging phases into the Continuous Integration concept (see below); apart from some minor semantic differences, however, the overall story is the same.

As we can see, the DevOps phases are more granular and specific than the typical product phases identified by most product development frameworks (such as Scrum and Kanban). The development phase is split into coding and building to emphasize the difference between writing the code and consolidating it; the deployment phase is divided into packaging, releasing, and configuring, encouraging modern concepts such as reusable resources, shared inventories, risk prevention strategies, and DRY (Don't Repeat Yourself) approaches. The testing and monitoring phases also play a major role, as we'll see shortly.

If we tried to merge the various DevOps phases into a smaller set of "typical" tasks, we could shrink the lifecycle as follows:

Design > Development > Testing > Deployment > Monitoring

This is simply a more concise and general way of saying the same thing.

Best Practices

Let's now try to shed some light on the best practices that distinguish the DevOps methodology.

Continuous Development

The DevOps lifecycle treats development as a continuous stream of consecutive iterations: the entire development process is divided into smaller development sprints with frequent, rapid release cycles. Frequent iterations minimize the chance of bottlenecks forming in the delivery pipeline: whenever a change is ready to move through the pipeline and the pipeline can receive it, it should be deployed as soon as possible.

Behaviour-Driven Development

Behavior-driven development (BDD), not to be confused with test-driven development (TDD), which is an entirely different thing, is an approach where you specify and design an application by describing its behavior from the outside. This involves high-level discussions built around specific, realistic examples: stakeholders tell you how they expect the system to behave, and you turn those expectations into acceptance criteria that drive what you need to develop.
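
BDD acceptance criteria are commonly phrased as Given/When/Then statements. The sketch below expresses one such stakeholder expectation as a plain Python `unittest` test; the `Cart` class and the scenario are hypothetical, and dedicated BDD tools (for example Gherkin-based runners such as Cucumber or behave) would normally keep the scenario text separate from the code.

```python
import unittest


class Cart:
    """Hypothetical system under test: a minimal shopping cart."""

    def __init__(self) -> None:
        self.items: dict = {}

    def add(self, name: str, price: float) -> None:
        self.items[name] = price

    def remove(self, name: str) -> None:
        self.items.pop(name, None)

    def total(self) -> float:
        return sum(self.items.values())


class RemovingAnItemFromTheCart(unittest.TestCase):
    """Acceptance criterion described from the outside, in Given/When/Then form."""

    def test_total_reflects_remaining_items(self) -> None:
        # Given a cart containing a book (10.0) and a pen (2.5)
        cart = Cart()
        cart.add("book", 10.0)
        cart.add("pen", 2.5)
        # When the customer removes the pen
        cart.remove("pen")
        # Then the total only includes the book
        self.assertEqual(cart.total(), 10.0)
```

Saved as a module, the scenario runs with `python -m unittest <module>`; the comments mirror the wording a stakeholder would use, which is the point of describing behavior from the outside.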

Continuous Integration

Continuous Integration (CI) is a practice that involves frequently integrating code into a shared repository, possibly several times a day, with each integration verified by automated tests and a build sequence. Some DevOps enthusiasts consider this concept to encompass the Testing and Packaging phases, to the point that it is often used as a synonym for (or container of) those two. We don't follow that approach: we consider CI a development practice that mostly takes place within the Building phase. As we said earlier, however, this is more a semantic debate than a conceptual one.

Highly-Efficient Testing

Only the testing phase can ensure that a change (i.e. a new development block) is ready to move through the pipeline. This phase should use a dedicated toolchain of testing tools (such as Selenium, JUnit, and so on) to find bugs in the developed software and to ensure there are no new flaws or regressions. If you're familiar with test-driven development (TDD) principles, you'll be happy to know that this approach can definitely be used in DevOps, as long as you implement it in a way that doesn't hinder or noticeably slow down your development cycles.

Unfortunately, from a DevOps perspective, manual testing introduces avoidable delays into the pipeline. How can we avoid that? The most efficient solution is to use a toolchain that automates testing, at least to some extent. Luckily, there are various tools that do just that: Jarvis, for example, is a JavaScript unit testing platform that can be configured to trigger a build and a battery of automated tests every time a developer checks code into the version control system. Whenever the build or any test fails, the whole team receives a notification and becomes aware of the issue almost in real time.
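
The check-in workflow described above can be sketched in Python as follows. This is not the API of Jarvis or of any CI server: `on_check_in`, the build and test callables, and `notify_team` are hypothetical stand-ins for whatever your toolchain provides (a chat or email integration, for instance).

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CheckInResult:
    commit: str
    passed: bool
    failures: List[str] = field(default_factory=list)


def on_check_in(
    commit: str,
    build: Callable[[str], bool],
    tests: List[Callable[[str], bool]],
    notify_team: Callable[[str], None],
) -> CheckInResult:
    """Hypothetical CI hook: build the commit, run every test, alert the team on failure."""
    failures: List[str] = []
    if not build(commit):
        failures.append("build")
    else:
        # The test battery only runs against a successful build.
        for test in tests:
            if not test(commit):
                failures.append(test.__name__)
    if failures:
        # The whole team learns about the broken check-in immediately.
        notify_team(f"{commit} failed: {', '.join(failures)}")
    return CheckInResult(commit, not failures, failures)


if __name__ == "__main__":
    messages: List[str] = []

    def check_login(commit: str) -> bool:
        return True

    def check_checkout(commit: str) -> bool:
        return False  # simulate a regression

    result = on_check_in("a1b2c3", lambda c: True, [check_login, check_checkout], messages.append)
    print(result.passed)  # False
    print(messages)       # ['a1b2c3 failed: check_checkout']
```

Skipping the tests when the build fails keeps feedback fast: there is no point running a test battery against code that doesn't compile or package.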

It's worth noting that automated testing can be applied to all code, not just application code: for example, all the infrastructure-related scripts required to set up new devices and/or deployment environments should also be treated as code, checked in, and tested automatically.
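
As an illustration of treating infrastructure definitions as testable code, here is a minimal Python check that could run on every check-in alongside the application tests. The environment format (a dict with a `servers` list of `name`/`port` entries) and `validate_environment` are hypothetical; many IaC tools also ship their own validation commands (for instance `terraform validate`).

```python
from typing import Dict, List


def validate_environment(spec: Dict) -> List[str]:
    """Return the problems found in a hypothetical environment definition."""
    problems: List[str] = []
    seen = set()
    for server in spec.get("servers", []):
        name = server.get("name")
        if not name:
            problems.append("server without a name")
            continue
        # Two servers with the same name would collide at provisioning time.
        if name in seen:
            problems.append(f"duplicate server name: {name}")
        seen.add(name)
        port = server.get("port", 0)
        if not 1 <= port <= 65535:
            problems.append(f"{name}: invalid port {port}")
    return problems


if __name__ == "__main__":
    staging = {
        "servers": [
            {"name": "web-1", "port": 443},
            {"name": "web-1", "port": 70000},
        ]
    }
    print(validate_environment(staging))
    # ['duplicate server name: web-1', 'web-1: invalid port 70000']
```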

Automated Deployment

Manual deployment is often slow and error-prone, two qualities that run against the whole DevOps approach. To minimize human error, automated deployment is almost always the way to go. Since you want to be able to back a change out quickly and reliably if there are problems, you'll need a tested rollback plan for each change, whether that means reversing the change or toggling a new feature off.
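
The two rollback options mentioned above can be sketched in a few lines of Python. The `Deployer` class, its health check, and its in-memory feature flags are hypothetical simplifications: a real setup would switch actual releases and store flags in a shared configuration service.

```python
from typing import Callable, Dict


class Deployer:
    """Hypothetical automated deployer that remembers the last known-good release."""

    def __init__(self, initial_version: str) -> None:
        self.current = initial_version
        self.previous = ""
        self.flags: Dict[str, bool] = {}

    def deploy(self, version: str, health_check: Callable[[str], bool]) -> bool:
        """Switch to `version`; roll back automatically if the health check fails."""
        self.previous, self.current = self.current, version
        if health_check(version):
            return True
        self.rollback()
        return False

    def rollback(self) -> None:
        # Option 1: reverse the change by restoring the previous release.
        if self.previous:
            self.current, self.previous = self.previous, ""

    def toggle(self, feature: str, enabled: bool) -> None:
        # Option 2: keep the release but switch a new feature off.
        self.flags[feature] = enabled

    def is_enabled(self, feature: str) -> bool:
        return self.flags.get(feature, False)


if __name__ == "__main__":
    d = Deployer("1.4.0")
    d.deploy("1.5.0", health_check=lambda v: False)  # simulated bad release
    print(d.current)  # 1.4.0
    d.deploy("1.5.1", health_check=lambda v: True)
    d.toggle("new-checkout", False)
    print(d.current, d.is_enabled("new-checkout"))  # 1.5.1 False
```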

Continuous Feedback

Most, if not all, DevOps goals are achieved thanks to strong communication that makes extensive use of continuous feedback between end users, customers, product owners, developers, quality assurance, and production engineers.

Infrastructure as Code

Infrastructure as Code (IaC) is the process of managing and provisioning computer data centers through machine-readable definition files (scripts or declarative definitions), rather than through physical hardware configuration or interactive configuration tools. It is a typical configuration mode for cloud computing environments, in particular for Infrastructure as a Service (IaaS) environments.

IaC can rely on either scripts or declarative definitions instead of manual processes, although the term is more often used to promote declarative approaches. The approach has spread in recent years because of the need for flexible environments, a requirement that often leads to the adoption of the DevOps paradigm.
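
The core idea behind declarative definitions is that you describe the desired state and let a tool work out the steps to get there. The Python sketch below shows that reconciliation step in its simplest form; the resource format and the `plan` function are hypothetical, and the output is only loosely analogous to the plan that tools like Terraform display before applying changes.

```python
from typing import Dict, List, Tuple

# Declarative definition: *what* should exist, not *how* to create it.
Resources = Dict[str, Dict[str, object]]


def plan(desired: Resources, actual: Resources) -> List[Tuple[str, str]]:
    """Compute the actions needed to make `actual` match `desired`."""
    actions: List[Tuple[str, str]] = []
    for name, config in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != config:
            actions.append(("update", name))
    # Anything running that is no longer declared gets removed.
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))
    return actions


if __name__ == "__main__":
    desired = {
        "vm-web": {"size": "small", "count": 2},
        "db-main": {"size": "large", "count": 1},
    }
    actual = {
        "vm-web": {"size": "small", "count": 1},
        "vm-legacy": {"size": "small", "count": 1},
    }
    print(plan(desired, actual))
    # [('create', 'db-main'), ('update', 'vm-web'), ('delete', 'vm-legacy')]
```

Once the actions are applied, `plan(desired, desired)` returns an empty list: running the same definition again changes nothing, which is what makes declarative definitions safe to re-apply.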

Conclusion

That's it, at least for now: we hope that this post will be useful for those who want to know more about the DevOps methodology and find a way to properly implement it within their company or team.